Perception and Action in Dynamic Environments

Towards Physically Predictive Models of Reality from Ego-Centric Data

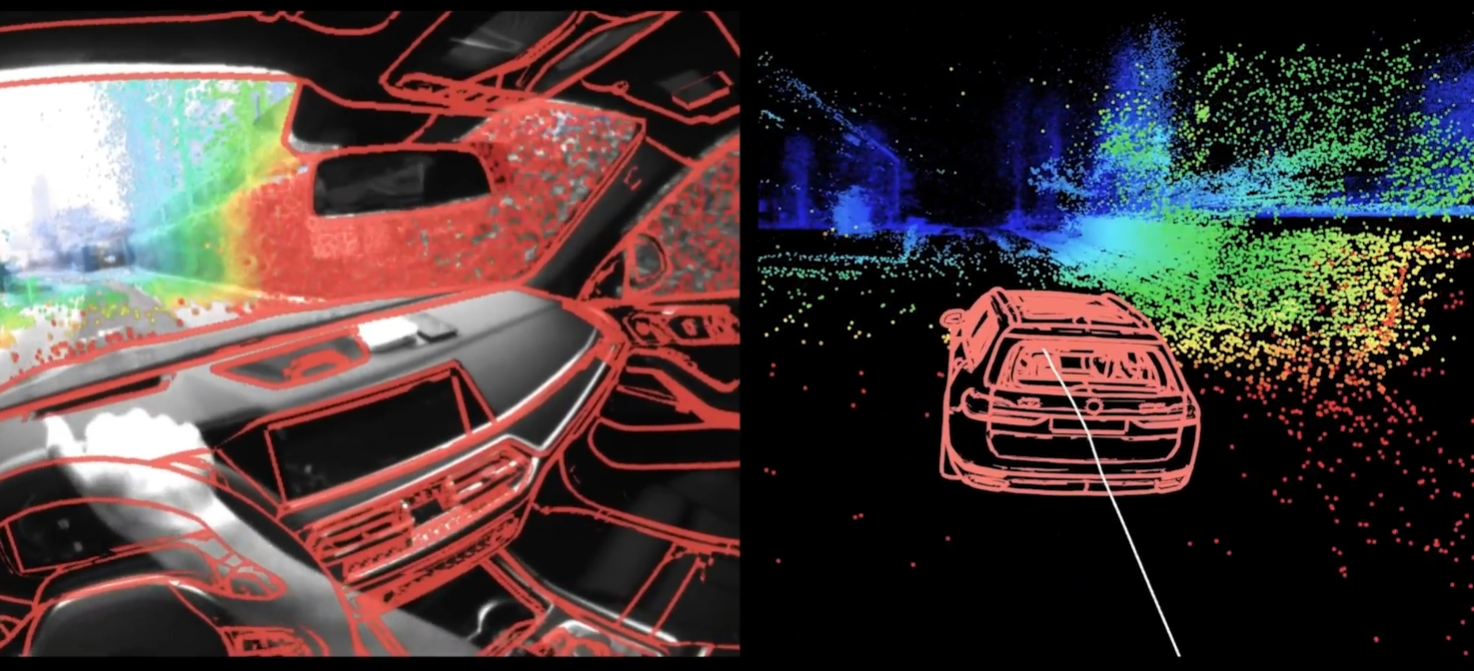

Modelling Reality for Perception & Action in Dynamic Environments

A worldwide, shared, dynamically updating, physically predictive model of reality, built and kept up to date with reality by our mobile observing devices, is key to enabling superhuman, ultra-low-power robotic and AI/AR perception in our world.

Why? Why shared? Why physically predictive? To predict ‘what if’. A model of reality that can predict sensor observations given actions provides a foundation for a [task-free] representation on which AI and robotics applications can be built with higher performance, lower power, and trust guarantees for users.

How is a semantic-free representation possible? This is currently something of a chicken-and-egg problem: how do we represent what is valuable in a dynamically updating model?

Completeness, consistency, privacy, ...? What granularity of representation is required? The question is easier to answer when you have a specific task to solve.

Predictive Models of Reality?

Key Research Areas

Devices

Location

Providing always-available, anywhere, high-accuracy and high-precision location.

Physical limits on mobile sensing, compute, and communication.

Index

Represent and synchronise the predictive model to reality.

Devices that build a map of reality

- Satellites
- Aerial & Cars
- Backpacks


Overview

- Project Aria: research devices that enable dynamic egocentric data capture in diverse real-world settings.
- Vision-Less Tracking: IMU-only navigation with deep learning, enabling robustness in highly dynamic environments.
- Towards Predictive Models: Neural 3D Video Synthesis and STaR (self-supervised tracking and reconstruction).

All-day wearable computer

All-day usage
- <70 g
- 2.5 Wh battery
- 30 hours standby
- 1.5 hours of continuous recording time

Mobile
- Multi-core Snapdragon processor
- 128 GB storage
- 4 GB RAM

Connectivity
- Wi-Fi 802.11a/b/g/n/ac
- Bluetooth 5.0
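The battery figures above imply rough average power budgets. As a back-of-the-envelope check, assuming the full rated 2.5 Wh is usable and that drain is constant within each mode (neither assumption is stated in the specification):

```latex
P_{\text{record}} \approx \frac{2.5\ \text{Wh}}{1.5\ \text{h}} \approx 1.67\ \text{W},
\qquad
P_{\text{standby}} \approx \frac{2.5\ \text{Wh}}{30\ \text{h}} \approx 0.083\ \text{W} = 83\ \text{mW}
```

On these assumptions, continuous recording draws about 20 times the standby power (30 h / 1.5 h = 20).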

LiveMaps

What matters in tech? Stepping back from the noise, I work out what’s really happening, what matters, and what it might mean.

“[…] if you were supplied with a computer… that worked all day long and was instantly responsive to every action you had. How much value could you derive from that?”

DOUG ENGELBART - 1968

Wearable Rendering Surface

Dream it

Build it

Grow it

“[…] if you were supplied with a computer… that worked all day long and was instantly responsive to every action that you had…”

…now AI is here

AI is here, but there is a clear gap in its capabilities today.

A physically predictive, live, continuously updated representation of the physical environment, built from the observations of the devices that use it.

ALL-DAY CONTEXT

ALL-DAY WEARABLE

ALWAYS-ON MACHINE PERCEPTION

REASONING (PREDICTION)

CONTEXTUAL AI = CONTEXT + AI

PHYSICAL + DIGITAL REALITY

Connecting Spatial Computing to the Physical World

Goals

1. Demonstrate the value of a fully indexed environment by showing how LiveMaps can be used to interface with the physical world.

2. Demonstrate LiveMaps' current technical state and a practical path to achieving Goal #1 in a consumer product.

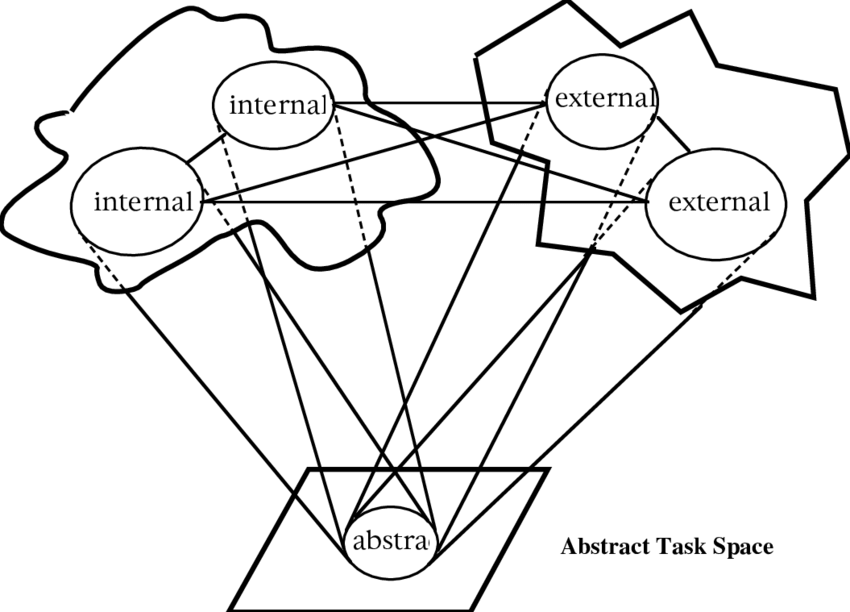

Representation in Distributed Cognitive Tasks

This project focuses on representational issues in distributed cognitive tasks: tasks that require processing information distributed across the internal mind and the external environment. It addresses three problems.

Focusing on Three Problems

- The distributed representation of information
- The interaction between internal and external representations
- The nature of external representations

Blurring the Physical and the Digital

The future of computation: extending and augmenting human capabilities through the sensing and actuation of objects and environments.

Representation in Distributed Cognitive Tasks.

Everyday Cognition

But I’m not only interested in paper as a design surface. Before screens, paper was just one way that we solved design problems. So, what do we do with the physical world?


I’m inspired by a paper Don Norman published in the early 1990s about the Tower of Hanoi. In it, he describes how information can be embedded within objects. The authors devised three games that embedded progressively more of the rules, or the cognition of the game, into the physical world.

The Tower of Hanoi game: a player must move all the disks onto the empty rightmost pole, moving only one disk at a time between the three poles.

Tower of Hanoi


In the Tower of Hanoi, you have to move the doughnuts over one at a time, and you can never put a bigger doughnut on top of a smaller one.

With coffee cups, the physical setup itself tells you not to put a small cup into a full one, because you’ll spill coffee everywhere. To me, this frames the challenge precisely: what is the value of putting information into the physical world?

Animation of an iterative algorithm solving the 6-disk problem.
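The caption above mentions an iterative algorithm. The one behind the original animation is not specified, so the following is a minimal sketch of the well-known iterative solution: the smallest disk cycles in a fixed direction on every odd-numbered move, and every even-numbered move is the only legal move that does not involve the smallest disk. The function name `hanoi_iterative` and the peg labels "A", "B", "C" are illustrative choices.

```python
def hanoi_iterative(n, source="A", auxiliary="B", target="C"):
    """Solve an n-disk Tower of Hanoi iteratively.

    Returns (moves, pegs), where moves is a list of (disk, from_peg, to_peg)
    and pegs maps each peg to its final stack (bottom first).
    """
    pegs = {source: list(range(n, 0, -1)), auxiliary: [], target: []}
    # The smallest disk cycles source -> auxiliary -> target when n is even,
    # and source -> target -> auxiliary when n is odd; either way the tower
    # ends on the target peg.
    cycle = [source, auxiliary, target] if n % 2 == 0 else [source, target, auxiliary]
    small_pos = 0  # index into cycle of the peg currently holding disk 1
    moves = []

    def move(frm, to):
        disk = pegs[frm].pop()
        # The puzzle's rule: never place a larger disk on a smaller one.
        assert not pegs[to] or pegs[to][-1] > disk
        pegs[to].append(disk)
        moves.append((disk, frm, to))

    for i in range(1, 2 ** n):
        if i % 2 == 1:
            # Odd move: advance the smallest disk one step along the cycle.
            frm = cycle[small_pos]
            small_pos = (small_pos + 1) % 3
            move(frm, cycle[small_pos])
        else:
            # Even move: the single legal move between the other two pegs.
            a, b = [p for p in cycle if p != cycle[small_pos]]
            if not pegs[a]:
                move(b, a)
            elif not pegs[b]:
                move(a, b)
            elif pegs[a][-1] < pegs[b][-1]:
                move(a, b)
            else:
                move(b, a)
    return moves, pegs
```

Calling `hanoi_iterative(6)` produces 63 moves (2^6 - 1), the minimum for six disks, with all disks ending on peg "C".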

The Brief: unlock a new category of ‘contextualised query’

- Make any object ‘smart’, e.g. ‘What’s in my fridge?’
- Change the meaning of a query according to spatial context, e.g. ‘Turn on’
- Make a query specific using spatial context, e.g. ‘Turn on the light above the cot’
- Unlock new object interfaces, e.g. ‘When I leave the room, turn off the lights’
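To make the ‘Turn on’ example above concrete, here is a minimal sketch of how spatial context might resolve an otherwise ambiguous command. It assumes, hypothetically, that an indexed environment can report each device's room and position in a shared metric frame; `Device` and `resolve_turn_on` are illustrative names, not a LiveMaps API, and "nearest device in the same room" is just one plausible resolution policy.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class Device:
    """Hypothetical entry in a spatial index of controllable devices."""
    name: str
    room: str
    position: Point  # metres, in an assumed shared map frame


def resolve_turn_on(user_room: str, user_position: Point,
                    devices: List[Device]) -> Optional[Device]:
    """Resolve a bare 'turn on' to the nearest device in the user's room.

    Returns None when the user's room contains no known device.
    """
    candidates = [d for d in devices if d.room == user_room]
    if not candidates:
        return None
    # math.dist gives the Euclidean distance between two points (Python 3.8+).
    return min(candidates, key=lambda d: math.dist(d.position, user_position))
```

Restricting candidates to the user's room before ranking by distance keeps a nearby device on the other side of a wall from being chosen.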

The ultimate ‘If this, then that’

Demonstrate possible ways to set up this rule:

When you sit on the sofa with a book, the lamp turns on.
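The sofa-and-book rule above is a trigger-action (‘if this, then that’) rule over spatial context. A minimal sketch of one way to represent and evaluate such rules follows, assuming a hypothetical `SceneState` snapshot that reports who is sitting where, what they are holding, and each device's on/off state. None of these names come from LiveMaps or Time Machine.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SceneState:
    """Hypothetical snapshot of what an indexed environment might report."""
    person_on: Dict[str, str] = field(default_factory=dict)  # person -> furniture
    holding: Dict[str, str] = field(default_factory=dict)    # person -> object in hand
    devices: Dict[str, bool] = field(default_factory=dict)   # device -> is on


@dataclass
class Rule:
    name: str
    condition: Callable[[SceneState], bool]  # the "if this" part
    action: Callable[[SceneState], None]     # the "then that" part


def evaluate(rules: List[Rule], state: SceneState) -> List[str]:
    """Run every rule whose condition holds; return the names of rules that fired."""
    fired = []
    for rule in rules:
        if rule.condition(state):
            rule.action(state)
            fired.append(rule.name)
    return fired


def on_sofa_with_book(s: SceneState) -> bool:
    # True if anyone is sitting on the sofa while holding a book.
    return any(s.person_on[p] == "sofa" and s.holding.get(p) == "book"
               for p in s.person_on)


def lamp_on(s: SceneState) -> None:
    s.devices["lamp"] = True


reading_rule = Rule("sofa+book -> lamp on", on_sofa_with_book, lamp_on)
```

For example, a state with `person_on={"alex": "sofa"}` and `holding={"alex": "book"}` fires `reading_rule` and sets `devices["lamp"]` to `True`. This sketch is level-triggered (it fires whenever the condition holds); a real routine would more likely fire once, on the transition into the condition.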

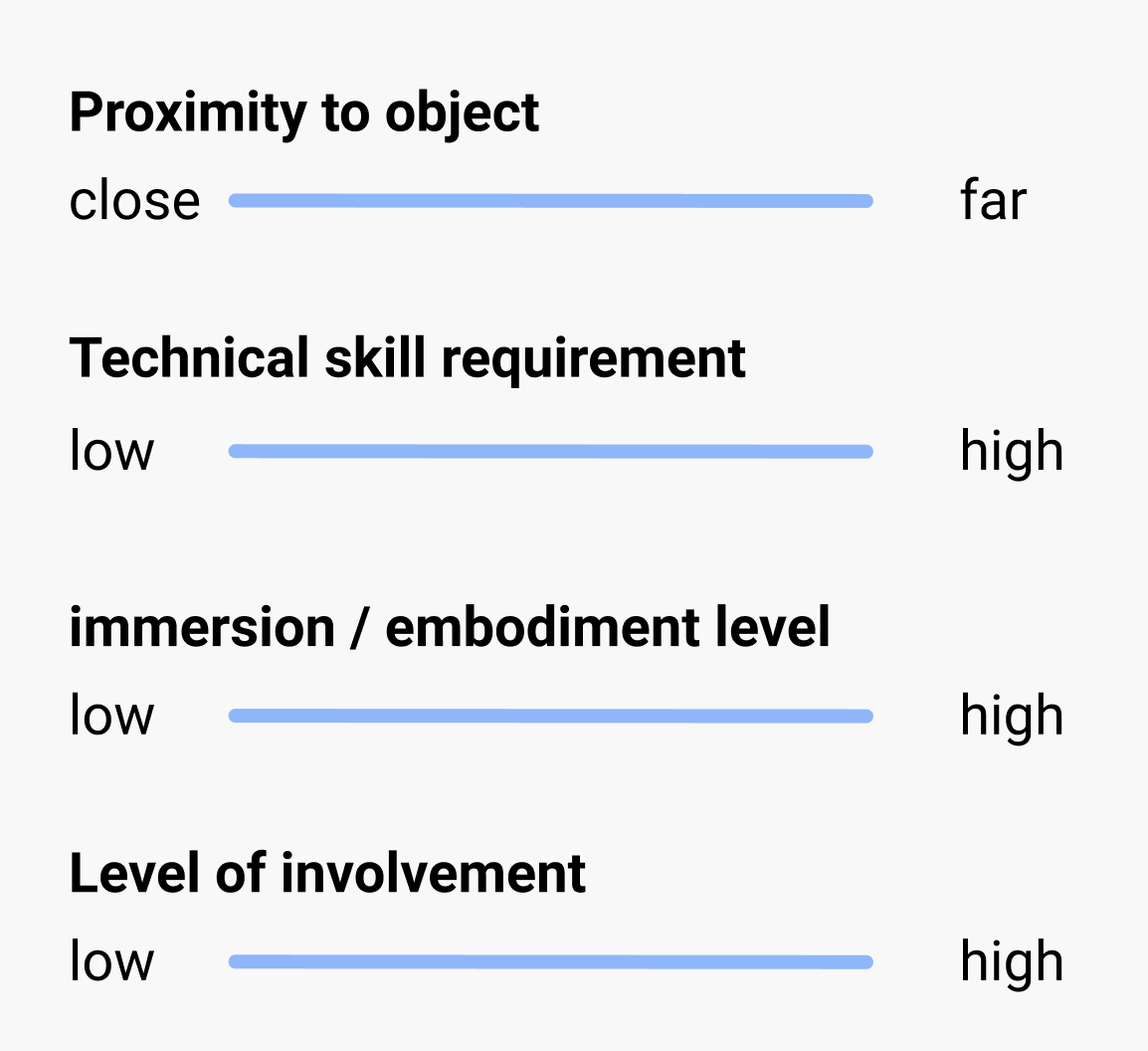

Dimensions

- Technical skill required vs. no technical skill required
- Close physical proximity vs. far physical proximity
- Immersive vs. non-immersive (refers to embodiment)
- Fine-grained control vs. less fine-grained control
- High involvement vs. low friction (level of involvement)
- Assisted creation vs. non-assisted creation

The following three pages depict the scenario:

When you sit on the sofa with a book, the lamp turns on.

The following pages explore a range of ways one could create this routine.

Standard Text-Based Coding

Time Machine recognises a user pattern and suggests turning it into a routine via a smartwatch notification.


Coding through a 3D Engine

Coding using an AR doll's house

Coding by performing a routine and recording it

Time Machine recognises a user pattern and suggests turning it into a routine via spatial sound (voice).

Coding by Drawing Connections

Coding using physical blocks with AR Labelling
